Program As Weights

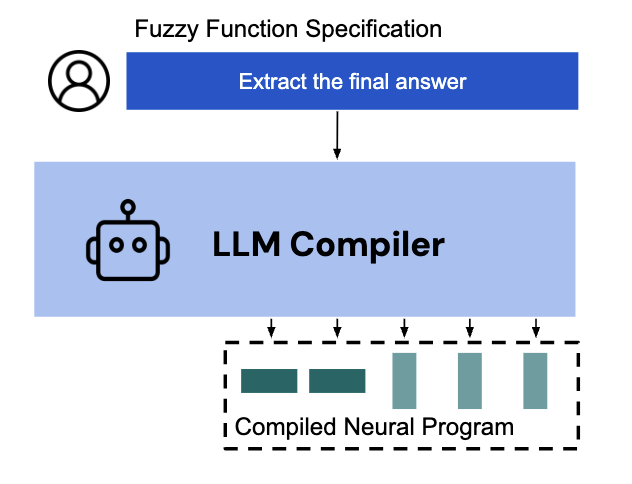

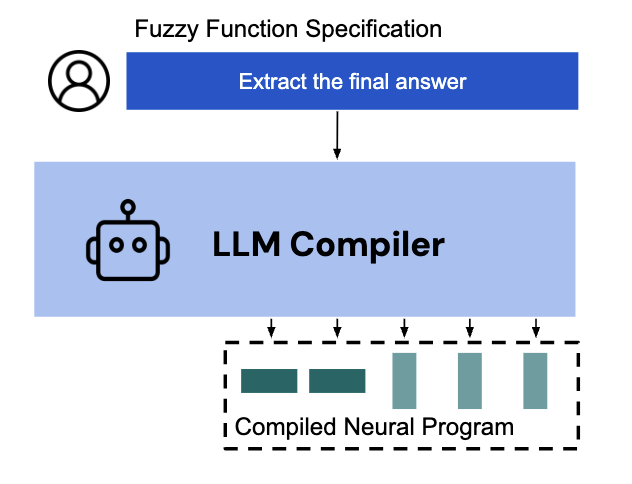

Research on representing programs as neural network weights, exploring novel approaches to program synthesis and neural architecture design.

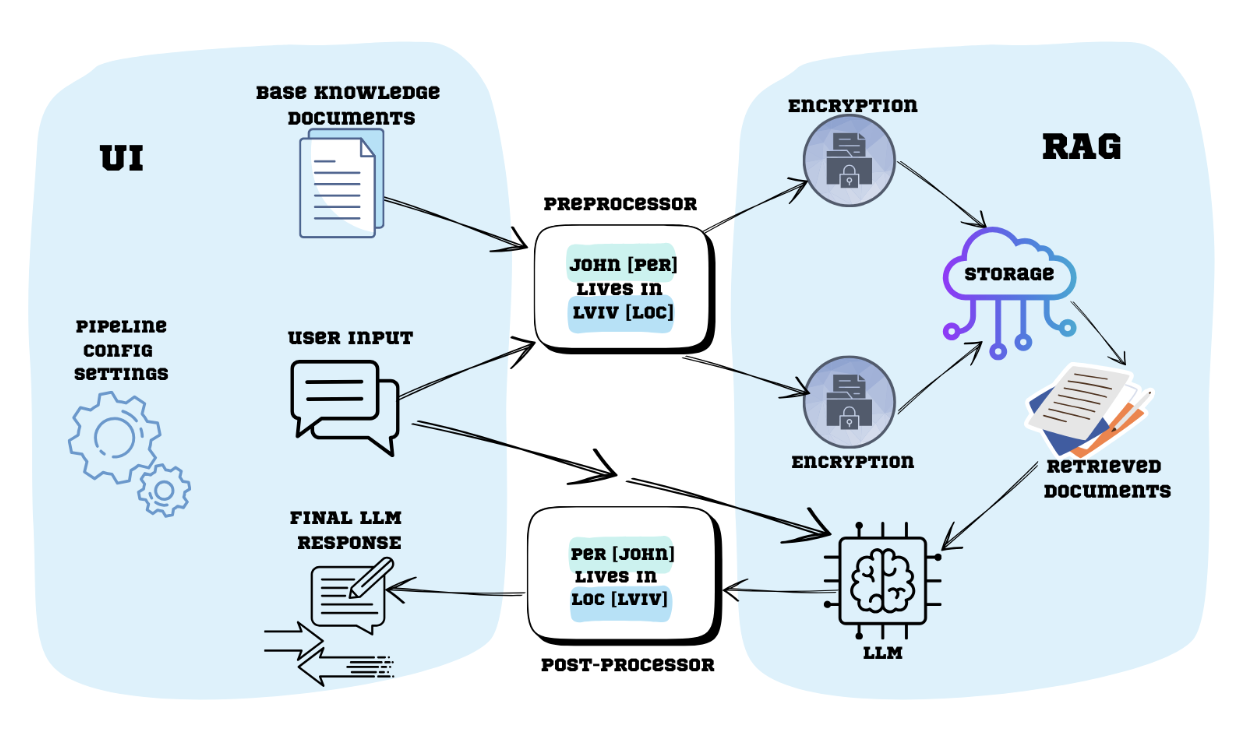

LLMs in Software Engineering • LoRA/HyperLoRA • Agent Systems • Privacy‑Preserving NLP

I am a graduate Computer Science student at the University of Waterloo, supervised by Yuntian Deng. My research interests include large language models (LLMs), privacy‑preserving NLP, and agent systems.

Waterloo, ON, Canada · Open to research collaborations and internships.


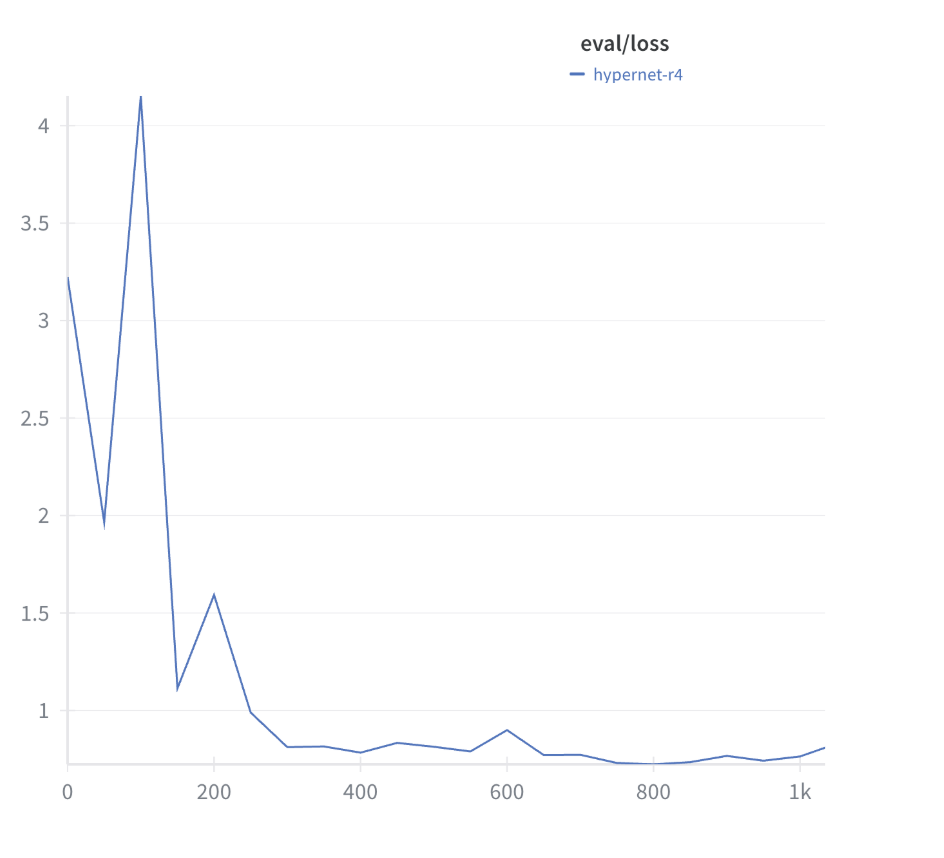

Repository‑level QA fine‑tuning with custom masking, plus GPU memory profiling of LoRA, full fine‑tuning, and a hypernetwork that generates LoRA weights. Focus on stable training and reproducibility.
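The idea of a hypernetwork that generates LoRA weights, rather than learning the low-rank factors directly, can be sketched roughly as follows. This is a minimal NumPy illustration under assumptions of my own, not the project's code. The conditioning vector `cond`, the class `LoRAHyperNetwork`, its two linear heads and the helper `lora_forward` are all hypothetical names. Training, masking and memory profiling are omitted.

```python
import numpy as np


class LoRAHyperNetwork:
    """Hypothetical hypernetwork: maps a conditioning vector to LoRA factors A and B."""

    def __init__(self, cond_dim, d_in, d_out, rank, alpha=16.0, seed=0):
        rng = np.random.default_rng(seed)
        self.d_in, self.d_out, self.rank = d_in, d_out, rank
        self.scale = alpha / rank  # usual LoRA scaling factor alpha / r
        # Linear heads that emit the flattened A (rank x d_in) and B (d_out x rank).
        self.head_a = rng.normal(0.0, 0.02, size=(cond_dim, rank * d_in))
        # Zero init means every generated B is zero, so delta W starts at 0.
        self.head_b = np.zeros((cond_dim, d_out * rank))

    def generate(self, cond):
        """Return the LoRA factors (A, B) for one conditioning vector."""
        a = (cond @ self.head_a).reshape(self.rank, self.d_in)
        b = (cond @ self.head_b).reshape(self.d_out, self.rank)
        return a, b

    def delta_w(self, cond):
        """Low-rank weight update of shape (d_out, d_in), rank at most `rank`."""
        a, b = self.generate(cond)
        return self.scale * (b @ a)


def lora_forward(x, w_frozen, a, b, scale):
    """Frozen linear layer plus low-rank update: x W^T + scale * x A^T B^T."""
    return x @ w_frozen.T + scale * (x @ a.T) @ b.T


# Usage: with B zero at initialisation, the adapted layer equals the frozen one.
rng = np.random.default_rng(1)
hyper = LoRAHyperNetwork(cond_dim=8, d_in=32, d_out=16, rank=4)
cond = rng.normal(size=8)
w = rng.normal(size=(16, 32))
x = rng.normal(size=(2, 32))
a, b = hyper.generate(cond)
y = lora_forward(x, w, a, b, hyper.scale)
assert np.allclose(y, x @ w.T)
```

Zero-initialising the head that emits `B` mirrors the common LoRA choice of starting `B` at zero, so the adapted layer initially behaves like the frozen one. Whether the project makes the same choice is not stated here.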

Email: lhotsko@uwaterloo.ca · GitHub: lilianahotsko · LinkedIn: Liliana Hotsko